Neet Bhagat


What It Took to Migrate a Monolithic EdTech App to Microservices on Azure in 9 Weeks

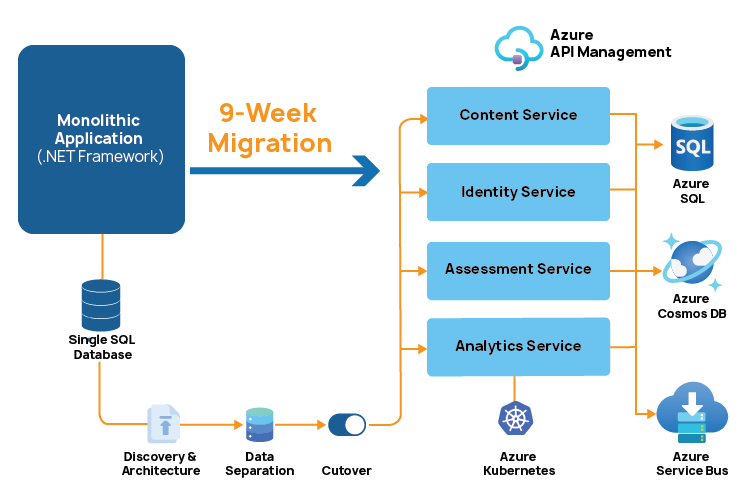

In the fast-moving EdTech market, speed to innovate is a competitive weapon. Our client — a growing online learning platform — was facing surging enrollments and demand for AI-assisted learning. Their legacy .NET Framework monolith was slowing them down: quarterly releases, scaling bottlenecks, and no path to integrate modern AI features.

They needed a modernization strategy that could be executed in record time without disrupting their active user base. The mandate: migrate from a tightly coupled .NET Framework monolith to a microservices-based, Azure-native architecture in just nine weeks — without downtime.

Success hinged on precise planning and disciplined execution: the platform had to gain scalability, AI-readiness, and a faster release cadence while staying online for every learner throughout the migration.

The Legacy Setup and Pain Points

Our audit revealed a single .NET Framework monolith powering thousands of concurrent learners — a structure we often see in platforms that have grown beyond their original design intent. While functional, it was showing signs of architectural fatigue, directly impacting innovation velocity and scalability.

- Rigid Release Model: All modules shared one deployment pipeline. Even a minor UI tweak triggered a full redeployment, increasing downtime risk and forcing a quarterly release cycle. We knew that introducing service isolation with containerized deployments on Azure Kubernetes Service (AKS) would be key to breaking this cycle.

- Scalability Constraints: The system scaled only vertically. During semester peaks, CPU usage spiked beyond 85%, putting SLA response times at risk. Our target design would shift to horizontal scaling with the autoscaling policies native to AKS and Azure App Service.

- Integration Roadblocks: Without an API-first design, integrating AI analytics engines or external LMS platforms meant custom workarounds. We planned for Azure API Management as a unified gateway to standardize integrations and enable faster partner onboarding.

- Maintenance Complexity: Tight module coupling created “dependency hell,” where a single library update risked breaking unrelated features. We identified domain-driven service decomposition as the foundation to remove these interdependencies.

- Data Bottlenecks: A single SQL database served all modules, creating contention during analytics-heavy workloads. Our blueprint called for separating operational and analytical data using Azure SQL for transactional workloads and Cosmos DB for high-volume analytics.

Defining the Migration Goals

From the assessment, we shaped modernization goals that solved immediate constraints while preparing the platform for AI-ready scalability. Each goal aligned to a known pain point and leveraged Azure-native managed services to minimize operational overhead:

- Service Independence: Decompose into domain-aligned microservices, deployed on Azure Kubernetes Service (AKS) with automated orchestration to enable independent releases.

- Cloud-Native Scalability: Use managed services such as Azure App Service and AKS autoscaling to handle unpredictable peaks without manual intervention.

- API-First Architecture: Implement Azure API Management as the unified gateway, supporting secure, standardized integrations with AI engines and third-party LMS platforms.

- Automated CI/CD: Build Azure DevOps pipelines with automated testing, security scans, and blue-green deployments to deliver weekly or faster releases.

- Data Domain Separation: Split transactional and analytical workloads using Azure SQL and Cosmos DB, with Data Factory orchestrating ETL and ensuring performance isolation.

These goals became the foundation of our cloud migration blueprint, which we presented to the client’s leadership and had approved before moving into execution.

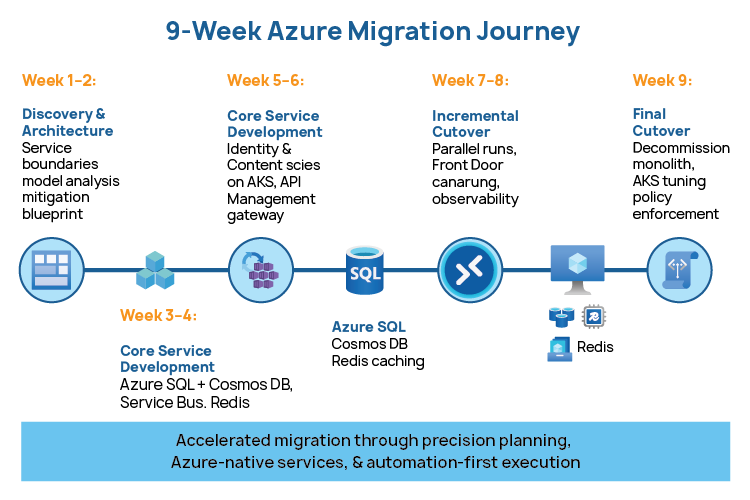

The 9-Week Migration Plan

Weeks 1–2: Discovery & Architecture

We began with domain-driven design workshops to map service boundaries for content delivery, assessments, analytics, and authentication. Our architects analyzed existing data models and performance telemetry, identifying where domain separation would yield immediate scalability.

- Automation Insight: We leveraged Azure DevOps Boards for backlog tracking and Azure Blueprints to predefine governance, compliance, and security baselines.

- Key Deliverable: A Technical Migration Approach document — with architecture diagrams, service boundaries, migration sequences, and rollback strategies — presented to and approved by client leadership before development began.

Weeks 3–4: Core Service Development

We extracted User Identity & Access and Content Delivery as the first two microservices, deploying them into Azure Kubernetes Service (AKS) with Helm charts for repeatable deployments.

- Managed Services Used: Azure API Management stood up as the secure, unified entry layer for all requests.

- Automation Insight: Azure DevOps CI/CD pipelines integrated SonarCloud scans and security gates, so builds that failed a quality or security check were blocked before reaching staging.

Weeks 5–6: Data & Integration Layer

We migrated transactional data to Azure SQL and analytics workloads to Cosmos DB, implementing Azure Data Factory pipelines for ETL and synchronization.

- Orchestration: Event-driven integration via Azure Service Bus reduced coupling between services.

- Caching Layer: Azure Cache for Redis minimized query load on databases during peak usage.

- Automation Insight: Load-testing scripts in Azure Load Testing were triggered automatically post-deployment.
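The caching layer mentioned above typically follows a cache-aside pattern: read from the cache first, and only query the database on a miss. The sketch below is a minimal illustration under stated assumptions, not the project's actual code. It uses an in-memory dictionary as a stand-in for Azure Cache for Redis, and `CacheAside`, `load_course`, and the 30-second TTL are hypothetical names and values chosen for the example.

```python
import time
from typing import Callable, Dict, Tuple


class CacheAside:
    """Cache-aside reads: check the cache first, fall back to the database on a miss."""

    def __init__(
        self,
        load_from_db: Callable[[str], str],
        ttl_seconds: float = 60.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._load_from_db = load_from_db  # hypothetical database read function
        self._ttl = ttl_seconds
        self._clock = clock
        # In-memory stand-in for a Redis instance: key -> (expires_at, value)
        self._store: Dict[str, Tuple[float, str]] = {}
        self.db_reads = 0  # counts how often the database was actually hit

    def get(self, key: str) -> str:
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: no database round trip
        value = self._load_from_db(key)  # cache miss or expired entry
        self.db_reads += 1
        self._store[key] = (now + self._ttl, value)
        return value

    def invalidate(self, key: str) -> None:
        """Call after a write so the next read reloads fresh data."""
        self._store.pop(key, None)


def load_course(course_id: str) -> str:
    # Stand-in for a query against the transactional database.
    return f"course-record:{course_id}"


cache = CacheAside(load_course, ttl_seconds=30.0)
first = cache.get("algebra-101")   # miss: loads from the database
second = cache.get("algebra-101")  # hit: served from the cache
```

The second read never reaches the database, which is the effect that reduces query load during peak usage. In a real deployment the TTL and invalidation rules would depend on how stale each kind of data is allowed to be.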

Weeks 7–8: Incremental Cutover

We ran both the monolith and microservices in parallel, routing a small percentage of traffic through Azure Front Door to the new services.

- Automation: Canary deployments in AKS ensured gradual exposure.

- Monitoring: Azure Monitor, App Insights, and OpenTelemetry instrumentation gave real-time insight into service health.
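The idea of sending a small percentage of traffic to the new services can be sketched as a deterministic bucket assignment. This is an illustration of percentage-based canary routing in general, not Azure Front Door's own routing algorithm. The function names, the `learner-…` IDs, and the 5% figure are assumptions made for the example.

```python
import hashlib


def routes_to_canary(user_id: str, canary_percent: int) -> bool:
    """Deterministically assign a user to the canary using a hash bucket in 0..99."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100
    return bucket < canary_percent


def pick_backend(user_id: str, canary_percent: int) -> str:
    """Return which stack should serve this user during the parallel run."""
    return "microservices" if routes_to_canary(user_id, canary_percent) else "monolith"


# Hypothetical learner IDs, used only to measure the resulting traffic split.
users = [f"learner-{i}" for i in range(10_000)]
share = sum(routes_to_canary(u, 5) for u in users) / len(users)
```

Hashing the user ID keeps each learner on the same backend across requests, and raising the percentage only adds users to the canary group without moving anyone back. Setting the percentage to 0 acts as an immediate rollback.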

Week 9: Final Cutover & Hardening

We decommissioned remaining monolith components, optimized AKS auto-scaling rules, and implemented Azure Policy to enforce configuration baselines.

- Automation Insight: Final performance testing and failover drills were triggered through Azure DevOps pipelines.

- Outcome: Platform met latency SLAs under triple the prior peak load.
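For readers unfamiliar with how autoscaling rules translate load into replica counts, the sketch below applies the scaling formula documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric), with a tolerance band around the target. The 60% CPU target and the replica limits are assumptions for illustration, not the values tuned in this project.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Compute a replica count the way the Kubernetes HPA formula does."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        wanted = current_replicas  # close enough to target: do not scale
    else:
        wanted = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds (assumed values in this sketch).
    return max(min_replicas, min(max_replicas, wanted))


# 4 replicas at 85% CPU against an assumed 60% target -> 6 replicas
scale_out = desired_replicas(4, current_metric=85.0, target_metric=60.0, max_replicas=20)
```

The tolerance band prevents constant scaling when utilization hovers near the target, and the minimum and maximum bounds cap cost and protect availability.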

This phase-wise, automation-first approach ensured we met the nine-week deadline without sacrificing quality or stability — a non-negotiable in a live, student-facing environment.

Azure Services Stack — Modern & Consulting-Grade Choices

Our architecture choices were guided by three principles: scalability, manageability, and AI-readiness. Each service was selected after evaluating alternatives, with an eye toward minimizing operational overhead while enabling rapid innovation.

- Azure Kubernetes Service (AKS): Chosen for container orchestration, service isolation, and horizontal scaling. Helm charts ensured repeatable deployments. We evaluated alternatives such as Azure Container Apps but rejected them because they offer limited control over node configuration.

- Azure API Management: Provided a centralized, secure gateway for all microservices, enabling versioning, throttling, and analytics. This was critical for future AI integrations without changing core services.

- Azure SQL + Cosmos DB: Split transactional and analytical workloads. Azure SQL handled OLTP operations, while Cosmos DB’s low-latency reads and global distribution supported analytics and personalization features.

- Azure Service Bus & Event Grid: Formed the backbone of the event-driven architecture, ensuring reliable, decoupled communication between services.

- Azure Cache for Redis: Reduced database load during high-traffic learning sessions.

- Azure Monitor + App Insights + OpenTelemetry: Delivered unified observability, enabling proactive issue resolution.
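The decoupling provided by the event-driven backbone can be illustrated with a small publish/subscribe sketch. It uses an in-memory bus as a stand-in for Azure Service Bus topics and does not call the Azure SDK. `InMemoryBus`, the `assessment.completed` topic, and the handler names are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, DefaultDict, Dict, List


@dataclass(frozen=True)
class Event:
    topic: str
    payload: Dict[str, str]


Handler = Callable[[Event], None]


class InMemoryBus:
    """Minimal topic-based pub/sub; a stand-in for a managed message broker."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, event: Event) -> None:
        # The publisher does not know who consumes the event.
        for handler in list(self._subscribers.get(event.topic, [])):
            handler(event)


analytics_log: List[str] = []
notifications: List[str] = []


def record_for_analytics(event: Event) -> None:
    analytics_log.append(f"{event.payload['learner']}:{event.payload['score']}")


def notify_learner(event: Event) -> None:
    notifications.append(f"Result ready for {event.payload['learner']}")


bus = InMemoryBus()
bus.subscribe("assessment.completed", record_for_analytics)
bus.subscribe("assessment.completed", notify_learner)

# The assessment service only publishes; it has no reference to its consumers.
bus.publish(Event("assessment.completed", {"learner": "learner-7", "score": "88"}))
```

Adding a new consumer, such as a future AI scoring service, means adding one more subscription rather than changing the publishing service. A real broker would also provide durability, retries, and dead-lettering, which this sketch omits.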

AI-Accelerated Migration — Time, Cost, and Quality Gains

Throughout the migration, we used AI-assisted tools to reduce manual effort and improve quality:

- Code Analysis & Refactoring: GitHub Copilot and Azure AI-assisted code review identified refactoring opportunities, cutting service extraction time by ~20%.

- Automated Test Generation: AI-generated unit and integration tests improved coverage early, reducing post-cutover defects.

- Log & Telemetry Anomaly Detection: Azure Machine Learning models flagged abnormal API latencies during canary deployments, enabling quicker root-cause fixes.
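To make the latency anomaly check concrete, the sketch below flags canary samples that sit far above a baseline using a simple z-score. This is a deliberately basic statistical stand-in, not the Azure Machine Learning models used in the project. The sample values and the threshold of 3 are assumptions.

```python
from statistics import mean, stdev
from typing import List, Sequence


def flag_latency_anomalies(
    baseline_ms: Sequence[float],
    canary_ms: Sequence[float],
    z_threshold: float = 3.0,
) -> List[int]:
    """Return indexes of canary samples that are unusually slow versus the baseline.

    Requires at least two baseline samples, because stdev needs two data points.
    """
    mu = mean(baseline_ms)
    sigma = stdev(baseline_ms)
    if sigma == 0:
        # Flat baseline: any slower sample counts as anomalous.
        return [i for i, value in enumerate(canary_ms) if value > mu]
    return [
        i for i, value in enumerate(canary_ms)
        if (value - mu) / sigma > z_threshold  # one-sided: only slow responses
    ]


# Illustrative API latencies in milliseconds (made-up values).
baseline = [180.0, 190.0, 200.0, 210.0, 220.0]
canary = [195.0, 205.0, 410.0, 215.0]
anomalies = flag_latency_anomalies(baseline, canary)
```

A check like this can gate a canary rollout: if any index is flagged, the pipeline holds the traffic percentage where it is until the cause is understood.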

This AI augmentation didn’t replace engineering expertise — it amplified it, delivering the migration faster, with fewer defects, and at a lower overall engineering cost.

Outcomes Achieved

The migration delivered measurable gains across performance, release agility, and AI readiness:

- Release Velocity: Quarterly releases moved to weekly, supported by automated CI/CD and AI-assisted test generation — reducing post-deployment defects and accelerating feature rollout.

- Scalability: The platform now supports 3× previous peak concurrency with sub-250 ms average latency, enabling AI-driven features like real-time engagement analytics without performance degradation.

- Reliability: Mean Time to Recovery (MTTR) reduced by 60% through granular service-level deployments, AI-driven log anomaly detection, and unified observability.

- Stability: Deployment rollbacks dropped by 75%, thanks to canary releases, automated pre-deployment tests, and AI-based regression checks.

- Data & Analytics Foundation: Separated transactional and analytical workloads now feed into a scalable Azure Data Lake, unlocking future AI-powered personalization, adaptive learning, and predictive analytics.

- Cost Efficiency: Optimized autoscaling policies cut peak infrastructure spend by 18% without impacting SLAs.

These results were achieved without service disruption to thousands of active learners — positioning the platform to deliver AI-driven learning capabilities at enterprise scale.

Challenges and How We Solved Them

- Service Boundary Definition: The tightly coupled monolith blurred ownership of data and logic. We ran domain-driven design workshops with stakeholders, aligning service boundaries to business domains and validating them through proof-of-concept extractions.

- Data Consistency During Migration: Splitting databases risked temporary mismatches. We implemented a dual-write strategy with eventual consistency patterns, monitored by automated reconciliation scripts.

- Performance Risk in Parallel Runs: Running monolith and microservices side by side increased infrastructure complexity. Canary deployments with Azure Front Door routing minimized exposure and allowed real-time rollback.

- Change Fatigue in Development Teams: Rapid migration cycles strained engineering capacity. We mitigated this by using automation for builds, tests, and environment provisioning, freeing developers to focus on core service logic.
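The dual-write and reconciliation approach described for data consistency can be sketched as follows. Two dictionaries stand in for the legacy database and the new data store, and `dual_write`, `reconcile`, and the `enrollment:…` keys are hypothetical names. The real scripts would compare rows across the actual databases.

```python
from typing import Dict, List, NamedTuple, Optional


class Mismatch(NamedTuple):
    key: str
    legacy: Optional[str]
    target: Optional[str]


def dual_write(legacy: Dict[str, str], target: Dict[str, str], key: str, value: str) -> None:
    """Write the same record to both stores during the migration window."""
    legacy[key] = value
    target[key] = value


def reconcile(legacy: Dict[str, str], target: Dict[str, str]) -> List[Mismatch]:
    """Report every key whose value differs or is missing on either side."""
    mismatches: List[Mismatch] = []
    for key in sorted(legacy.keys() | target.keys()):
        old_value, new_value = legacy.get(key), target.get(key)
        if old_value != new_value:
            mismatches.append(Mismatch(key, old_value, new_value))
    return mismatches


legacy_db: Dict[str, str] = {}
new_db: Dict[str, str] = {}
dual_write(legacy_db, new_db, "enrollment:1", "active")
dual_write(legacy_db, new_db, "enrollment:2", "active")

# Simulated drift: writes that reached only the legacy store.
legacy_db["enrollment:2"] = "withdrawn"
legacy_db["enrollment:3"] = "active"

drift = reconcile(legacy_db, new_db)
```

Here `drift` lists the changed record and the missing one, which is the signal an automated job would use to repair the new store or raise an alert. Running the comparison on a schedule keeps eventual-consistency gaps short and visible.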

Each challenge became an opportunity to refine the migration approach and reduce delivery risk.

The Foundation for AI Features

Beyond resolving scalability and release bottlenecks, the new architecture created a foundation for AI-driven learning capabilities. Service decomposition and API-first design allow AI services to integrate without disrupting core workflows.

The separated analytical data layer in Azure Data Lake and Cosmos DB enables real-time learner engagement analytics, adaptive content recommendations, and predictive retention modeling. Event-driven data flows ensure AI models operate on fresh, high-quality data.

With standardized endpoints through Azure API Management, future integrations — such as Azure OpenAI-powered tutor bots or intelligent assessment scoring — can be deployed incrementally. This flexibility ensures the platform can evolve with emerging AI capabilities while maintaining enterprise-grade stability.

Key Takeaways

A nine-week migration to an Azure-native, microservices-based architecture is achievable, but only with precise planning, managed services, and automation-first execution.

By removing architectural bottlenecks and enabling AI-ready integrations, we positioned the client to scale, innovate, and compete at the pace modern EdTech demands. For more details, get in touch with our experts at business@acldigital.com.
