Senthamizh Raj Thiruneelakandan

AI-Powered Personalization – How Devices Learn, Adapt, and Anticipate You

Ever noticed your phone cue a playlist before a workout, or your watch switch to running mode as you start? That’s AI-powered personalization. In this blog, we’ll see how apps, wearables, and displays learn habits, sense context, predict needs, and stay seamless across devices, plus how companies like Spotify use it to keep us hooked.

Introduction – Moving Beyond “Smart” to Truly Intuitive

“Smart” once meant step counters and fixed reminders. Helpful, but rigid.

Now, AI makes devices anticipate needs. Watches detect runs, phones suggest camera modes, and apps quietly adjust layouts. That’s the shift: from “smart” to intuitive — experiences that feel personal, seamless, and invisible.

Adaptive Interfaces – Dynamic Layouts Powered by ML

Old “smart” devices just sent data to the cloud and waited. Today, ML runs on-device, adjusting layouts and flows in real time.

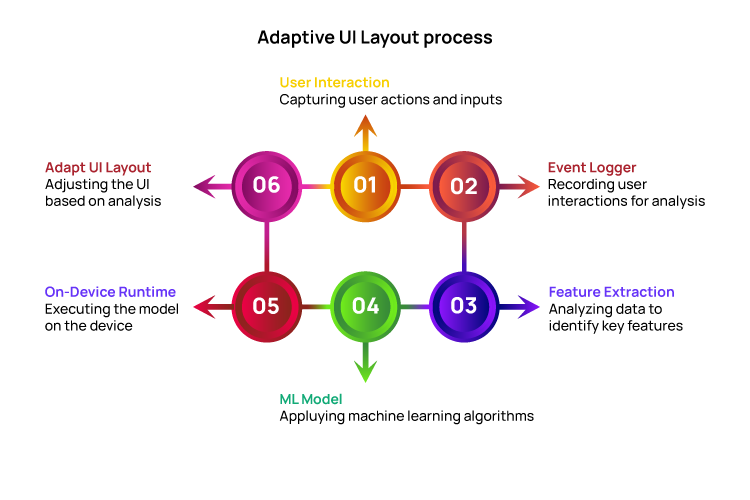

How it works

- Event Logging → Every tap, swipe, and skip is tracked.

- Feature Extraction → Signals like navigation effort, engagement, or input style emerge.

- Modeling → Algorithms cluster similar users, test new layouts (reinforcement learning), and balance exploring new ideas against sticking with proven ones.

- On-Device ML → Tiny models (TensorFlow Lite, Core ML) make changes in milliseconds.

For the user, it feels like the app “gets you.” Buttons you use appear closer, visuals adapt for readability, and navigation feels faster without you having to hunt for settings.
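To make the "explore vs. stick with proven" idea concrete, here is a minimal sketch of an epsilon-greedy bandit that picks between layouts. It is an illustration under stated assumptions, not how any particular app does it: the class `LayoutBandit`, the layout names, and the reward signal (1.0 if the user completed their task quickly, 0.0 otherwise) are all hypothetical.

```python
import random


class LayoutBandit:
    """Epsilon-greedy choice between layouts (hypothetical illustration).

    Most of the time it serves the layout with the best average reward so far;
    with probability `epsilon` it explores a random layout instead.
    """

    def __init__(self, layouts, epsilon=0.1, seed=None):
        self.layouts = list(layouts)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.shows = {name: 0 for name in self.layouts}
        self.reward_sum = {name: 0.0 for name in self.layouts}

    def mean_reward(self, layout):
        shows = self.shows[layout]
        return self.reward_sum[layout] / shows if shows else 0.0

    def choose(self):
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.layouts)          # explore
        return max(self.layouts, key=self.mean_reward)    # exploit

    def record(self, layout, reward):
        """Log the outcome of showing `layout` (reward is an assumed 0..1 signal)."""
        self.shows[layout] += 1
        self.reward_sum[layout] += reward


# Assumed example: two layouts, one observed outcome each.
bandit = LayoutBandit(["compact", "large_buttons"], epsilon=0.2, seed=7)
bandit.record("compact", 0.0)
bandit.record("large_buttons", 1.0)
next_layout = bandit.choose()
```

A model this small is cheap enough to run on the device itself. A production system would more likely use a contextual bandit or a trained model, but the feedback loop has the same shape.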

Context-Aware Personalization – Sensor Fusion & Real-Time Adaptation

Your devices are packed with sensors: GPS, accelerometers, heart-rate monitors, gyroscopes. Instead of just logging data, AI fuses it to infer context.

The Tech Stack: Sensors, Models, and Context Engines

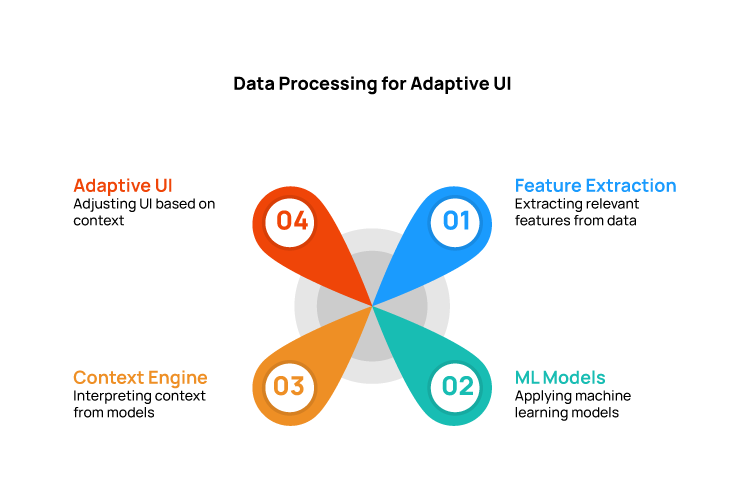

Personalization doesn’t come from thin air — it’s a layered system working behind the scenes:

- Sensors capture raw signals.

- Feature Layer translates them (e.g., “fast walking,” “low light”).

- Models predict what you’ll need.

- Context Engine decides when and how to adapt.

- Delivery Layer updates the UI.
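The five layers above can be sketched as a chain of small functions. This is a toy illustration, not a real context engine: every name and threshold here (`SensorReading`, 2.5 m/s for running, 120 bpm, 50 lux) is an assumption chosen for the example, and simple rules stand in for a trained model.

```python
from dataclasses import dataclass


@dataclass
class SensorReading:
    """Sensors layer: raw signals (field names are hypothetical)."""
    speed_m_s: float       # e.g. derived from GPS / accelerometer
    heart_rate_bpm: int    # from a heart-rate sensor
    ambient_lux: float     # from a light sensor


def extract_features(r):
    """Feature layer: translate raw numbers into labelled signals."""
    return {
        "fast_walking": 1.5 <= r.speed_m_s < 2.5,
        "running": r.speed_m_s >= 2.5,
        "elevated_heart_rate": r.heart_rate_bpm >= 120,
        "low_light": r.ambient_lux < 50,
    }


def predict_need(features):
    """Model layer: hand-written rules standing in for a trained model."""
    if features["running"] and features["elevated_heart_rate"]:
        return "workout"
    if features["low_light"]:
        return "night"
    return "default"


def decide_adaptation(need, user_is_interacting):
    """Context engine: decide when and how to adapt."""
    if user_is_interacting:
        return None  # assumed policy: never change the UI mid-interaction
    return {"workout": "show_workout_screen", "night": "dim_display"}.get(need)


def deliver(action):
    """Delivery layer: here it only describes the UI update."""
    return f"UI update: {action}" if action else "UI unchanged"


reading = SensorReading(speed_m_s=3.2, heart_rate_bpm=150, ambient_lux=300.0)
need = predict_need(extract_features(reading))
print(deliver(decide_adaptation(need, user_is_interacting=False)))
# prints "UI update: show_workout_screen"
```

Keeping each layer a separate function means the rule-based `predict_need` could later be swapped for an on-device model without touching the sensor or delivery code.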

Everyday Impact: Apps That “Understand” the Situation

This is where personalization feels human. Fitness apps jump to “intense mode” when your heart rate spikes. Maps suggest faster routes if you’re driving. Wearables dim screens at night. It’s not random: it’s prediction plus context, making apps feel almost companion-like.

Predictive Engagement – Anticipating Next Actions

Personalization isn’t just “what to watch.” AI now predicts what you’ll do.

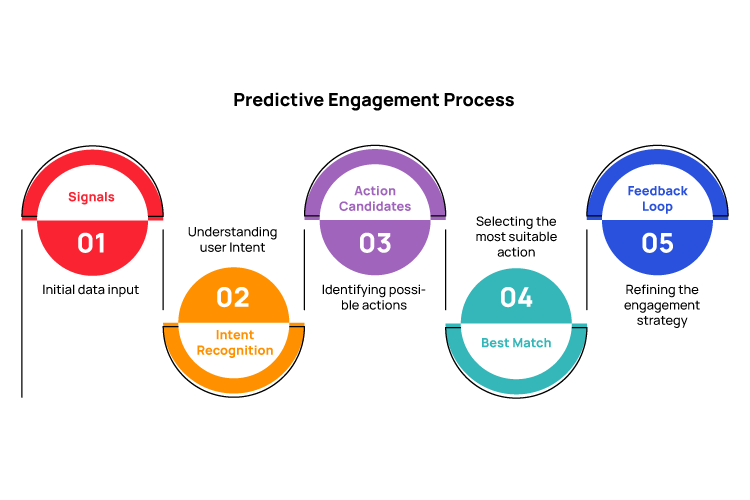

Inside the Pipeline: From Intent Recognition to Action Triggers

Think of predictive engagement as your device learning to read the room. Here’s how it plays out behind the scenes:

- Signals → time, location, habits. (“It’s 7 PM, and you’re at the gym.”)

- Intent Guessing → “Workout mode incoming.”

- Action Options → maybe music, maybe fitness tracker.

- Trigger → Nudges with the best match before you ask.

- Learning → Every accept or skip fine-tunes the system.

From the user’s side, it feels like an assistant finishing your sentences.
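The signal, intent, trigger, and learning loop described above can be sketched with nothing more than counters. This is a simplified stand-in for a real intent model: the `IntentPredictor` class, the context tuple `("evening", "gym")`, the action names, and the 0.5 threshold are all assumptions made for illustration.

```python
from collections import defaultdict


class IntentPredictor:
    """Suggests the action a user most often accepts in a given context."""

    def __init__(self, min_score=0.5):
        self.min_score = min_score
        # context -> action -> [times accepted, times offered]
        self.stats = defaultdict(lambda: defaultdict(lambda: [0, 0]))

    def score(self, context, action):
        accepts, offers = self.stats[context][action]
        # Laplace smoothing: an unseen action starts at 0.5 rather than 0 or 1.
        return (accepts + 1) / (offers + 2)

    def suggest(self, context, actions):
        """Trigger: return the best-scoring action, or None if nothing is convincing."""
        best = max(actions, key=lambda a: self.score(context, a))
        return best if self.score(context, best) >= self.min_score else None

    def feedback(self, context, action, accepted):
        """Learning: every accept or skip updates the counts."""
        entry = self.stats[context][action]
        entry[1] += 1
        if accepted:
            entry[0] += 1


predictor = IntentPredictor()
context = ("evening", "gym")  # signals: it's 7 PM and you're at the gym
predictor.feedback(context, "start_playlist", accepted=True)
predictor.feedback(context, "start_playlist", accepted=True)
predictor.feedback(context, "open_fitness_tracker", accepted=False)
suggestion = predictor.suggest(context, ["start_playlist", "open_fitness_tracker"])
```

Returning `None` when no action clears the threshold matters as much as the suggestion itself, because a nudge that is usually skipped is better left unsent.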

Cross-Device Continuity – Ecosystem-Level Personalization

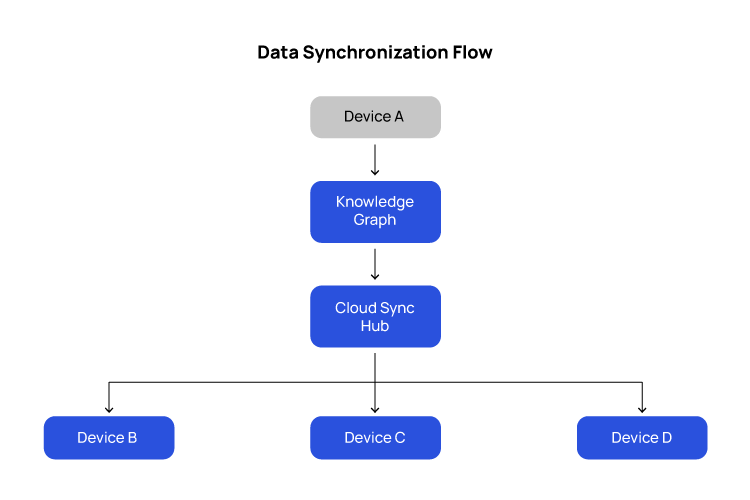

We don’t live on one device anymore. Phones, watches, cars, TVs — AI ties them together.

Behind the scenes:

- Knowledge Graphs link your habits: “User likes podcasts in the morning → usually in car → suggest podcast app first.”

- Cloud Sync shares context across devices. Start an email on a tablet, finish on a laptop, and review on your phone.

On the surface: start a playlist on your phone, continue it on your watch, and pick it up in your car — no logins, no starting over.
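As a rough sketch of the handoff behind "start on your phone, continue on your watch," the snippet below keeps one playback state per user and lets the most recent write win. Everything here is hypothetical: `SessionStore` is an in-memory stand-in for a cloud sync service, and real systems also have to handle authentication, clock skew, and offline devices.

```python
from dataclasses import dataclass


@dataclass
class PlaybackState:
    track_id: str
    position_s: float
    updated_at: float   # timestamp reported by the writing device (assumed comparable)
    device: str


class SessionStore:
    """In-memory stand-in for a cloud sync service (hypothetical)."""

    def __init__(self):
        self._latest = {}

    def publish(self, user_id, state):
        """Keep the newest state per user (last writer wins)."""
        current = self._latest.get(user_id)
        if current is None or state.updated_at >= current.updated_at:
            self._latest[user_id] = state

    def resume(self, user_id):
        """What a newly active device would pick up, or None if nothing is stored."""
        return self._latest.get(user_id)


store = SessionStore()
store.publish("user-1", PlaybackState("track-42", 35.0, updated_at=100.0, device="phone"))
store.publish("user-1", PlaybackState("track-42", 95.0, updated_at=160.0, device="watch"))
# A delayed, older update from the phone arrives late and is ignored.
store.publish("user-1", PlaybackState("track-42", 55.0, updated_at=120.0, device="phone"))
car_resumes_from = store.resume("user-1")
```

This also shows the trade-off noted in this section: if the device holding the freshest state never manages to publish, every other device resumes from stale context.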

Benefits and Trade-Offs:

- Benefits: smooth flow, consistency, sense of ownership across devices.

- Trade-offs: feels spooky at times, raises privacy questions, and can break if one device fails.

Privacy & Ethics – Building Trust into AI Personalization

Personalization is powerful, but without trust, it breaks.

Safeguards:

- On-Device ML → Phones learn locally, not by sending raw data to servers. Faster, safer, less creepy.

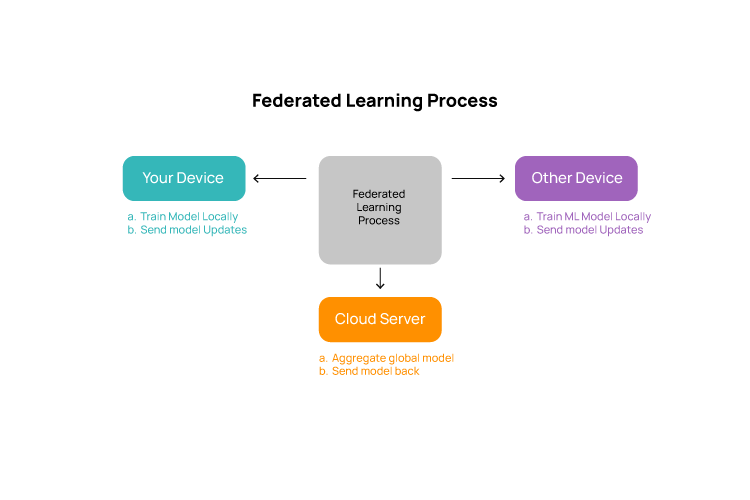

- Federated Learning → Devices train locally, then share only model updates rather than raw data, like joining a study group without revealing personal notes.
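A minimal sketch of the federated idea, assuming a toy one-feature linear model: each device trains on its own data, and the server only ever sees the resulting weights, which it averages by example count (the scheme usually called federated averaging). The function names and data are made up for illustration, and real deployments add safeguards such as secure aggregation that are not shown here.

```python
def local_update(weights, examples, lr=0.1):
    """On-device step: one pass of gradient descent for y = w*x + b.

    `examples` never leaves the device; only the returned weights do.
    """
    w, b = weights
    for x, y in examples:
        error = (w * x + b) - y
        w -= lr * error * x
        b -= lr * error
    return (w, b)


def federated_average(updates):
    """Server step: average device weights, weighted by example count.

    `updates` is a list of (weights, n_examples) pairs.
    """
    total = sum(n for _, n in updates)
    dims = len(updates[0][0])
    return tuple(
        sum(weights[i] * n for weights, n in updates) / total
        for i in range(dims)
    )


# Assumed toy data: two devices, each holding private (x, y) pairs.
device_data = [[(1.0, 2.0), (2.0, 4.0)], [(3.0, 6.0)]]
global_weights = (0.0, 0.0)
for _ in range(20):  # 20 communication rounds
    updates = [(local_update(global_weights, data), len(data)) for data in device_data]
    global_weights = federated_average(updates)
```

Weighting by example count keeps a device with a single data point from pulling the shared model as hard as a device with hundreds.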

User expectations:

- Control → Give users toggles and modes.

- Transparency → Explain changes (“Suggested because you play jazz at night”).

- Choice → Let them opt in, not struggle to opt out.

AI should feel like a friendly assistant, not a pushy roommate.

Quick Case Study – Spotify’s Personalization Pipeline

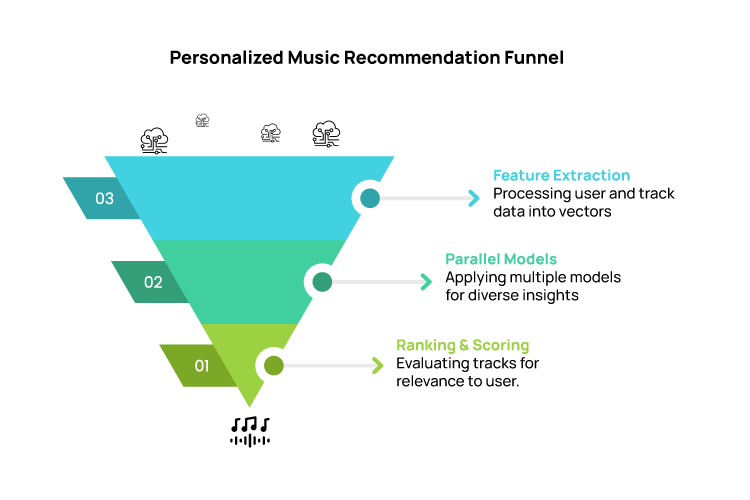

Spotify’s “magic” is an ML orchestra:

- Collaborative Filtering → Crowd wisdom: “people like you also liked…”

- Content-Based Filtering → Audio analysis with CNNs on spectrograms.

- Contextual Models → Factoring in time, device, or activity.

- Reinforcement Learning → Every skip or save is real-time feedback.

All of this takes place in a giant embedding space where users and songs are represented as points; closeness means a better match.
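To illustrate "closeness means a better match," here is a small sketch that ranks tracks by cosine similarity to a user vector. The three-dimensional vectors and track names are invented for the example, and cosine similarity is one common choice of closeness measure; nothing here claims to reflect Spotify's actual embeddings or metric.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def recommend(user_vec, track_vecs, k=2):
    """Return the k track names whose embeddings are closest to the user's."""
    ranked = sorted(
        track_vecs,
        key=lambda name: cosine_similarity(user_vec, track_vecs[name]),
        reverse=True,
    )
    return ranked[:k]


# Made-up embeddings; real ones have far more dimensions and are learned.
user = [0.9, 0.1, 0.2]
tracks = {
    "late_night_jazz": [0.8, 0.2, 0.1],
    "gym_techno": [0.1, 0.9, 0.3],
    "acoustic_folk": [0.6, 0.1, 0.7],
}
print(recommend(user, tracks))
# prints ['late_night_jazz', 'acoustic_folk']
```

Because users and tracks live in the same space, the same function can also find users with similar taste or tracks that sound alike, simply by changing which vectors are compared.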

Impact on users:

Spotify doesn’t just mirror taste, it shapes it. It starts safe with favorites, sprinkles in similar new tracks, then tests your “risk tolerance.” Skip or save, and the system adapts. That’s why it feels like Spotify just gets you.

Conclusion – Balancing Sophistication with Human Experience

AI now powers devices that predict, adapt, sync, and even shape our habits. Impressive? Definitely.

The real win is when personalization gives control, clarity, and trust. Big players like Spotify, Netflix, and Apple prove it works, but smaller apps can adopt modular ML services too.

The next step is simple — if you’re building something new, think about how AI can make it feel more personal. And if you’d like a hand, we’re here to help.
