Dipali Pawar

Prompting techniques for QA Engineers

With AI getting so much attention and slowly becoming an integral part of day-to-day work across all software roles — including QA — it’s clear we can’t stay on the sidelines. We have to embrace it and master it.

Just a couple of years back, AI was for specialized users. However, with the rise of GenAI, it is no longer limited to them. That said, even with the ease GenAI brings, one thing hasn’t changed: to truly harness its power, we need to master the art of writing good prompts, which is what Prompt Engineering is about.

For QAs, this skill is not entirely new. Asking the right questions has always been at the heart of our role. The only difference in the AI era is the format. And that’s exactly what sits at the core of Prompt Engineering.

Why is it so important, and how do we build it? Let’s explore.

Before Prompting: Key LLM Parameters That Shape Responses

Before diving into prompting, it helps to understand a few LLM settings that influence the output of LLM-based applications.

The most important among these are:

- Temperature – Controls how random the output is. Many APIs accept values from 0 to 1, and some allow up to 2. A lower value makes the result more deterministic, because the model favors the most probable tokens. A higher value gives more creative, less deterministic results.

- Top P – Also known as nucleus sampling: the model samples only from the smallest set of tokens whose cumulative probability reaches P. Set the value lower for precise and reliable answers, and raise it for a wider range of creative or varied responses. It is usually best to adjust either temperature or Top P, not both at the same time.

- Max Length – The number of tokens the model produces can be controlled by setting the maximum output length. It helps to prevent long or irrelevant responses and control costs.

- Stop Sequences – A string that tells the model where to stop generating tokens.
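To make the first two settings more concrete, here is a minimal sketch of how temperature and Top P reshape a next-token distribution. The function names and the three example scores are hypothetical, and real LLM APIs apply these settings internally, so treat this as an illustration of the idea rather than any vendor's implementation.

```python
import math

def apply_temperature(logits, temperature):
    """Turn raw scores into probabilities; a lower temperature sharpens the distribution."""
    if temperature <= 0:
        # Real APIs often treat 0 as "always pick the top token"; this sketch just rejects it.
        raise ValueError("temperature must be > 0 in this sketch")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def top_p_filter(probs, top_p):
    """Keep the smallest set of most likely tokens whose cumulative probability reaches top_p."""
    ranked = sorted(enumerate(probs), key=lambda pair: pair[1], reverse=True)
    kept, cumulative = [], 0.0
    for index, p in ranked:
        kept.append((index, p))
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(p for _, p in kept)
    # Renormalize so the surviving tokens form a valid distribution again.
    return {index: p / total for index, p in kept}

# Hypothetical scores for three candidate next tokens.
logits = [2.0, 1.0, 0.1]
sharp = apply_temperature(logits, 0.2)  # low temperature: top token dominates
flat = apply_temperature(logits, 1.0)   # higher temperature: probability is more spread out
nucleus = top_p_filter(flat, 0.8)       # drops the least likely token
```

With these example scores, the low-temperature distribution puts far more weight on the first token, and the Top P filter keeps only the two most likely tokens.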

Why Prompts are important and how they relate to QA work

For QA use cases like test plan creation, test case generation, or automation script generation, we naturally process a lot of context and rely on our experience and domain knowledge.

Ever wondered what would happen if we removed these valuable inputs and context, and still tried to produce test artifacts such as a strategy, a plan, or tests?

You guessed it right: a human would not produce quality test artifacts that way. The same is true of any GenAI-based tool or LLM.

To give the LLM exactly the input it needs, we have to understand how prompts work and the different approaches to prompting.

As we all know, one size doesn’t fit all. Similarly, a single prompting technique is not going to help us with all QA tasks.

The most common elements of a prompt, irrespective of the technique, are:

- Task – what you want the model to do or solve.

- Background – useful details or references that guide the model’s response.

- Prompt – the question you ask the model.

- Result format – how you would like the answer to be structured or presented.
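The four elements above can be assembled into a single prompt string. The sketch below is one possible way to do it; the `build_prompt` helper and the login-form details are made up for illustration and do not come from any specific tool.

```python
def build_prompt(task, background, question, result_format):
    """Join the four common prompt elements into one labeled prompt string."""
    sections = [
        ("Task", task),
        ("Background", background),
        ("Prompt", question),
        ("Result format", result_format),
    ]
    return "\n\n".join(f"{label}:\n{text.strip()}" for label, text in sections)

# Hypothetical example values for a login feature.
prompt = build_prompt(
    task="Generate test cases for the login form.",
    background="Passwords must be 8 to 64 characters. Accounts lock after 5 failed attempts.",
    question="Which positive, negative, and boundary test cases should we cover?",
    result_format="A table with columns: ID, Preconditions, Steps, Expected result.",
)
print(prompt)
```

Keeping the elements as separate arguments makes it easy to reuse the same background and result format across many tasks.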

Prompting Techniques for QA Engineers

These techniques are simple to start with and effective for day-to-day QA tasks.

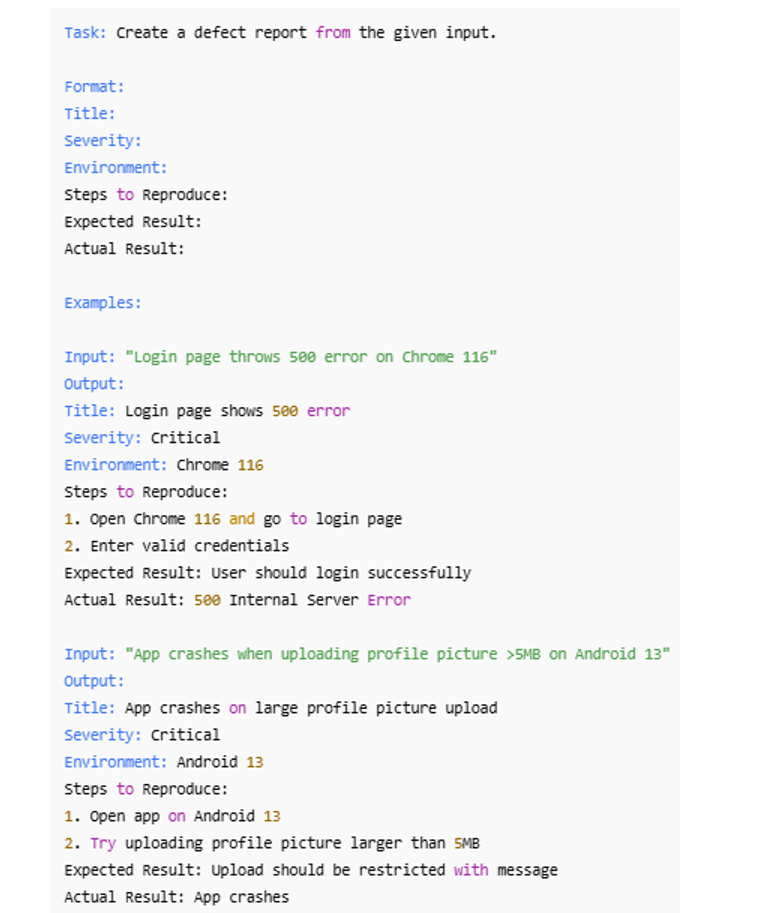

1. Few shot Prompting

Few-shot prompting is a good technique to start with.

Here we provide sample inputs together with the output expected for each one, essentially as input -> output pairs.

This technique is best suited when we know the input and expected output formats very well, when they are limited in number, and when they are largely deterministic in nature.

For example:

- Creating a defect in the given format from the input information provided.
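As a rough sketch of the defect example, the helper below builds a few-shot prompt from input -> output pairs. The function name, the defect format, and the tester notes are all hypothetical; the resulting string would be sent to whichever LLM you use.

```python
def build_few_shot_prompt(instruction, examples, new_input):
    """Build a prompt from (input, output) example pairs followed by the new input."""
    parts = [instruction]
    for raw_note, formatted_defect in examples:
        parts.append(f"Input: {raw_note}\nOutput: {formatted_defect}")
    # Leave the final output empty so the model completes it in the same format.
    parts.append(f"Input: {new_input}\nOutput:")
    return "\n\n".join(parts)

# Hypothetical tester notes and the defect format we want back.
examples = [
    (
        "login button does nothing on Safari",
        "Title: Login button unresponsive | Browser: Safari | Severity: High",
    ),
    (
        "typo on the pricing page, says 'montly'",
        "Title: Typo on pricing page | Browser: All | Severity: Low",
    ),
]

few_shot_prompt = build_few_shot_prompt(
    instruction="Rewrite each tester note as a defect in the format shown.",
    examples=examples,
    new_input="cart total is wrong after removing an item in Chrome",
)
print(few_shot_prompt)
```

Two or three well-chosen pairs are usually enough to show the format; the examples should cover the variations you expect in real inputs.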

2. Chain of Thought (CoT) Prompting

Chain-of-thought (CoT) prompting enables complex reasoning through intermediate reasoning steps. It’s most suitable for complex tasks where reasoning is important.

When applied correctly, CoT can significantly boost QA productivity in day-to-day activities.

As QAs, we often deal with reasoning-heavy tasks, for example, analyzing requirements to identify corner cases during test development. In such cases, we naturally apply step-by-step thinking: reviewing requirements, walking through workflows from a user perspective, and then analyzing potential corner cases.

CoT works in the same way. Simply asking the model to reason “step by step” in your prompt often guides it to produce more detailed and more accurate outputs for QA tasks.

Example: Test case design

Prompt:

“For each requirement, reason step by step about valid inputs, invalid inputs, boundary conditions, and user error scenarios. Then generate detailed test cases with preconditions, steps, and expected results.”

This prompt will usually deliver far better results than simply asking the model to “list test cases”.

Other areas where CoT can be applied include test plan design, risk identification, and generating test closure reports with reasoning based on the factual data available from test artifacts.
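The test case design prompt above can be combined with a list of requirements in a few lines. This is only a sketch: `build_cot_prompt` and the two sample requirements are assumptions made for illustration.

```python
# The instruction text is taken from the example prompt in this article.
COT_INSTRUCTION = (
    "For each requirement, reason step by step about valid inputs, invalid inputs, "
    "boundary conditions, and user error scenarios. Then generate detailed test cases "
    "with preconditions, steps, and expected results."
)

def build_cot_prompt(requirements):
    """Number the requirements and append the step-by-step reasoning instruction."""
    numbered = "\n".join(
        f"{number}. {text}" for number, text in enumerate(requirements, start=1)
    )
    return f"Requirements:\n{numbered}\n\n{COT_INSTRUCTION}"

# Hypothetical requirements for a password reset feature.
cot_prompt = build_cot_prompt([
    "The reset link expires 30 minutes after it is sent.",
    "A new password must differ from the previous one.",
])
print(cot_prompt)
```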

3. Self Consistency

This is one of the more advanced prompting techniques. It works by generating multiple diverse reasoning paths for the same problem and then selecting the most consistent or most common answer. This approach tends to produce more reliable and consistent QA results and is particularly valuable in test decision-making.

It is especially helpful when dealing with vague requirements. By using self-consistency, you can generate multiple interpretations of a requirement (exploring different reasoning paths) and then consolidate the most common understanding. This provides stronger, more valuable outputs while also highlighting ambiguities that need clarification.

Example: Identify vague requirements from the given requirement documents

Prompt:

“Generate three different reasoning paths about possible interpretations of this requirement. Then, consolidate the most consistent interpretation and highlight ambiguities that need clarification.”
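The selection step of self-consistency can also be done outside the model: sample several answers, then keep the one that appears most often. The sketch below assumes the answers are short enough to compare after simple normalization; the function name and the sampled interpretations are hypothetical, and in practice each answer would come from a separate LLM call made with a non-zero temperature.

```python
from collections import Counter

def most_consistent(answers):
    """Return the most frequent answer and the share of samples that agree with it."""
    if not answers:
        raise ValueError("need at least one sampled answer")
    # Normalize lightly so trivial differences in case or spacing do not split the vote.
    normalized = [answer.strip().lower() for answer in answers]
    winner, votes = Counter(normalized).most_common(1)[0]
    return winner, votes / len(normalized)

# Hypothetical interpretations of the vague requirement
# "lock the account after repeated failed logins".
sampled = [
    "Lock after 3 failed attempts",
    "lock after 3 failed attempts ",
    "Lock after 5 failed attempts",
]

winner, agreement = most_consistent(sampled)
print(winner, round(agreement, 2))
```

A low agreement share is itself useful: it signals that the requirement is ambiguous and should go back to the product owner for clarification.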

4. Prompt Chaining

Detailed, elaborate, and large prompts should give accurate answers, right?

Not necessarily, as each LLM has a limited context window, that is, a limit on the number of tokens it can process at once. So how can we pass a lot of context or information to an LLM, or extract and refine information effectively?

The answer to this problem is Prompt Chaining, where the output of one prompt is used as the input to the next prompt. Each prompt in the chain transforms or further processes the previous response until the final desired state is reached. This helps to process large inputs while still getting the desired output.

Example: Building regression coverage from exploratory testing sessions.

Chain Steps:

- Capture observations during the exploratory session. (Prompt-1)

- Transform them into structured “test ideas.” (Prompt-2 with input as output from Prompt-1)

- Expand into proper test cases for the regression suite. (Prompt-3 with input as output from Prompt-2)

Prompt chaining works best for tasks that are complex, multi-step, or need refinement.
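The three chain steps above can be sketched as a simple loop in which each response becomes the input of the next prompt. Everything here is illustrative: `run_chain`, the templates, and `fake_llm` are hypothetical, and `fake_llm` is a stand-in that only records prompts, so you would replace it with a call to your actual LLM client.

```python
def run_chain(llm, templates, initial_input):
    """Feed the output of each prompt into the next template's {input} slot."""
    current = initial_input
    for template in templates:
        current = llm(template.format(input=current))
    return current

# Hypothetical templates mirroring the three chain steps.
TEMPLATES = [
    "Summarize the key observations from these exploratory session notes:\n{input}",
    "Turn these observations into structured test ideas:\n{input}",
    "Expand these test ideas into regression test cases:\n{input}",
]

calls = []

def fake_llm(prompt):
    """Stand-in for a real model call; records the prompt and returns a placeholder."""
    calls.append(prompt)
    return f"<output of step {len(calls)}>"

result = run_chain(fake_llm, TEMPLATES, "Checkout froze after applying two coupons.")
print(result)
```

Because each step has a narrow job, you can inspect or correct the intermediate output before it flows into the next prompt.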

5. Tree of Thoughts

Tree of Thoughts (ToT) generalizes chain-of-thought prompting and is helpful when you want to explore multiple reasoning paths in parallel. It organizes these paths as a tree, where each path is evaluated at each step and the least promising ones are pruned.

Core Principles of ToT:

- Branching: At each reasoning step, generate multiple possible “thoughts” instead of just one.

- Evaluation: Assess the likelihood of each branch (e.g., “Is this defect cause plausible?”).

- Pruning: Keep the promising paths and discard the unlikely ones.

- Exploration Depth: Continue reasoning down multiple paths until reaching the final answers.
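The four principles above map onto a small beam-search loop. The sketch below is a simplified illustration, not the full ToT framework: the thought tree and plausibility scores are hard-coded, hypothetical values, whereas in a real setup both the branching and the evaluation would be LLM calls.

```python
def tree_of_thoughts(root, expand, score, beam_width=2, depth=2):
    """Branch, evaluate, and prune reasoning paths for a fixed number of levels."""
    frontier = [[root]]
    for _ in range(depth):
        # Branching: extend every surviving path with each possible next thought.
        candidates = [path + [thought] for path in frontier for thought in expand(path[-1])]
        if not candidates:
            break
        # Evaluation and pruning: keep only the highest-scoring paths.
        candidates.sort(key=score, reverse=True)
        frontier = candidates[:beam_width]
    return frontier

# Hypothetical defect-cause tree and plausibility scores.
THOUGHTS = {
    "checkout fails intermittently": ["payment gateway timeout", "stale cart cache", "UI typo"],
    "payment gateway timeout": ["no retry on timeout", "timeout set too low"],
    "stale cart cache": ["cache not invalidated on price change"],
}
PLAUSIBILITY = {
    "payment gateway timeout": 0.8,
    "stale cart cache": 0.6,
    "UI typo": 0.1,
    "no retry on timeout": 0.7,
    "timeout set too low": 0.5,
    "cache not invalidated on price change": 0.9,
}

def expand(thought):
    return THOUGHTS.get(thought, [])

def score(path):
    # Score a path by how plausible its latest thought is.
    return PLAUSIBILITY.get(path[-1], 0.0)

paths = tree_of_thoughts("checkout fails intermittently", expand, score)
best_path = paths[0]
print(" -> ".join(best_path))
```

Here the implausible "UI typo" branch is pruned at the first level, and the two most plausible cause chains survive to the end.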

Let’s take the example of identifying and prioritizing risk areas as part of risk-based test planning. ToT can process the given requirements along multiple branches and provide broader coverage.

Test Planning → Risk Analysis & Prioritization

How ToT Helps:

For risk-based testing, multiple “risk trees” can be explored: performance bottlenecks, integration risks, security loopholes.

Example Prompt:

“Branch into separate reasoning paths for performance risks, security risks, and integration risks in this module. Then compare and rank which risks need higher testing priority.”

Conclusion

These prompting techniques are relatively easy to begin with, though they are not an exhaustive list.

Few-shot, chain-of-thought, self-consistency, prompt chaining, and tree of thoughts — each technique has its place in our day-to-day testing.

The goal is not to master them all at once, but to start small, experiment, and continuously refine. With the right prompts, AI won’t replace our role — it will only strengthen it.
